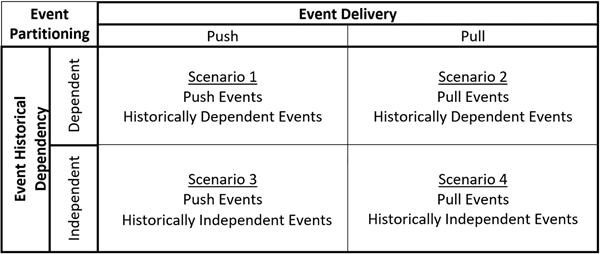

One of the key concepts to consider when planning and designing the system architecture for an ArcGIS GeoEvent Server system is workload separation; in GeoEvent Server terminology, this is known as event partitioning. With event partitioning, each event stream is placed into the correct box in the table, and an ideal production system architecture addresses each cell with a separate GeoEvent Server system. Using this strategy, each system can be optimized for the specific challenges of the event streams it handles. The final architecture must also balance the added complexity of system administration, licensing, and hardware costs.

In a production environment, strive to place the event streams that belong to each box on their own GeoEvent Server machine. There are four base scenarios to consider; however, the additional partitioning strategies discussed below may increase the number of scenarios. The concepts that make up each base scenario are summarized here and discussed in further detail below.

- Scenario 1 – Push Events + Historically Dependent

- Scenario 2 – Pull Events + Historically Dependent

- Scenario 3 – Push Events + Historically Independent

- Scenario 4 – Pull Events + Historically Independent
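The four base scenarios form a two-by-two grid: delivery method (push or pull) crossed with historical dependency. The sketch below classifies an inventory of streams into those cells so that each group can be assigned to its own GeoEvent Server system. The `Delivery` enum, the `scenario` and `group_by_scenario` helpers, and the stream names are hypothetical and used only for illustration; they are not part of any GeoEvent Server API.

```python
from collections import defaultdict
from enum import Enum


class Delivery(Enum):
    PUSH = "push"
    PULL = "pull"


def scenario(delivery: Delivery, historically_dependent: bool) -> int:
    """Return the base scenario number (1-4) for an event stream."""
    if historically_dependent:
        return 1 if delivery is Delivery.PUSH else 2
    return 3 if delivery is Delivery.PUSH else 4


def group_by_scenario(streams):
    """Group (name, delivery, historically_dependent) tuples by scenario number."""
    groups = defaultdict(list)
    for name, delivery, dependent in streams:
        groups[scenario(delivery, dependent)].append(name)
    return dict(groups)


# Hypothetical stream inventory, for illustration only.
streams = [
    ("avl-geofence-alerts", Delivery.PUSH, True),
    ("weather-stations", Delivery.PULL, False),
    ("lightning-strikes", Delivery.PUSH, False),
]
print(group_by_scenario(streams))
```

Each key in the resulting dictionary corresponds to one cell of the table, and therefore to one candidate GeoEvent Server system.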

Event Delivery

GeoEvent Server receives event streams by either push or pull delivery.

Push Events

When using input connectors such as Receive Text from a TCP Socket or Receive JSON on a REST Endpoint, the data is pushed to GeoEvent Server. Once the input is created, it waits for data to arrive; the data source generates the data and pushes each event (or set of events) to GeoEvent Server. This distinction is important because it allows a system architect to design a system that intercepts the incoming messages and routes them according to a chosen strategy.

Pull Events

When using input connectors such as Poll an External Website for XML or Poll an ArcGIS Server for Features, GeoEvent Server pulls (or polls) event data. Periodically, a request is sent to the data source, and the response includes one or more events to be processed. When polling for data, there is no opportunity to intercept the messages before they arrive at GeoEvent Server. In this case, it is important to consider architectural strategies for partitioning the data and for handling duplicate events.
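Successive polls often return overlapping results, so the same event can arrive more than once. One common way to handle this is to remember a key for each event already seen and drop repeats. The sketch below assumes each event is a dictionary with hypothetical `track_id` and `time` fields that together identify it uniquely; the `PollDeduplicator` class is illustrative and is not a GeoEvent Server component. A bounded, insertion-ordered cache keeps memory use fixed.

```python
from collections import OrderedDict


class PollDeduplicator:
    """Drop events already returned by an earlier poll.

    Assumes (hypothetically) that (track_id, time) uniquely identifies an event.
    """

    def __init__(self, max_keys: int = 100_000):
        self.max_keys = max_keys
        self._seen = OrderedDict()  # insertion-ordered set of event keys

    def filter(self, events):
        fresh = []
        for event in events:
            key = (event["track_id"], event["time"])
            if key in self._seen:
                continue  # duplicate from an overlapping poll
            self._seen[key] = None
            if len(self._seen) > self.max_keys:
                self._seen.popitem(last=False)  # evict the earliest-inserted key
            fresh.append(event)
        return fresh


dedup = PollDeduplicator()
first = dedup.filter([{"track_id": "bus-7", "time": 1000}, {"track_id": "bus-9", "time": 1000}])
second = dedup.filter([{"track_id": "bus-9", "time": 1000}, {"track_id": "bus-9", "time": 1030}])
print(len(first), len(second))  # 2 1
```

The cache size is a trade-off: it must be large enough to cover the overlap between consecutive polls, but evicted keys can no longer be recognized as duplicates.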

Event Historical Dependency

Your choice of a highly available architecture may be influenced by the nature of the historical dependency of your event data.

Historically Independent Events

Most real-time processing and analysis performed in GeoEvent Server is historically independent. Each event is processed individually without any knowledge of prior events. Each event is treated as an independent entity and can be processed on any GeoEvent Server instance with an identical configuration. Traditional strategies for workload balancing of events across multiple machines can work well in these situations.
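Because historically independent events carry no state between them, a simple round-robin distribution across identically configured instances is sufficient. The sketch below illustrates that idea in the abstract; the instance names are hypothetical, and in practice this role is usually played by a load balancer in front of push inputs rather than by custom code.

```python
from itertools import cycle


def round_robin(events, instances):
    """Pair each event with the next instance in turn; there is no per-track affinity."""
    targets = cycle(instances)
    return [(next(targets), event) for event in events]


# Hypothetical instance names.
instances = ["geoevent-a", "geoevent-b"]
for target, event in round_robin(["e1", "e2", "e3"], instances):
    print(event, "->", target)
```

Consecutive events from the same track may land on different instances, which is acceptable only because no instance needs to know what came before.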

Historically Dependent Events

Some real-time processing and analysis performed in GeoEvent Server requires knowledge of previous events; examples include the enter and exit spatial operations. Both require knowledge of the previous event’s location before an accurate result can be determined for the current event. Since GeoEvent Server does not provide a means of sharing this historical event context between machines, it is imperative that each event is routed to the machine that has already processed previous events with the same Track ID. Consequently, a highly available system must be designed so that events can be routed based on their Track ID.
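Routing by Track ID means every event for a given track must always reach the same machine. A minimal way to express this is to hash the Track ID to an instance index. The `route_by_track_id` function and the instance names below are hypothetical; the sketch uses `zlib.crc32` because, unlike Python's built-in `hash()` for strings, it returns the same value in every process.

```python
import zlib


def route_by_track_id(track_id: str, instances: list[str]) -> str:
    """Always map the same Track ID to the same instance."""
    # crc32 is stable across processes and restarts; hash() on str is randomly salted.
    index = zlib.crc32(track_id.encode("utf-8")) % len(instances)
    return instances[index]


# Hypothetical instance names.
instances = ["geoevent-a", "geoevent-b", "geoevent-c"]
for track in ["bus-7", "bus-9", "bus-7"]:
    print(track, "->", route_by_track_id(track, instances))
```

Note a limitation of this simple modulo scheme: adding or removing an instance remaps most Track IDs, so those tracks lose their history on the new machine. Consistent hashing reduces how many tracks move, but any failover still means the receiving instance starts without prior context for the affected tracks.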

Additional workload separation considerations

In addition to the above strategies for partitioning events across different systems, it is important to review the nature of the data itself. Often, the teams or projects that use the data, or the geographic location of the event data, provide additional insight into how to partition it. For example, different teams or projects may have different data processing requirements, and data that covers a large geographic area may be partitioned into regions.
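Geographic partitioning can be as simple as assigning each event to a region by its coordinates before it is sent to the system that owns that region. The sketch below uses hypothetical longitude/latitude bounding boxes (`REGIONS`) and a hypothetical `region_for` helper; real region boundaries would come from your own operational areas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str
    min_x: float
    min_y: float
    max_x: float
    max_y: float

    def contains(self, x: float, y: float) -> bool:
        return self.min_x <= x <= self.max_x and self.min_y <= y <= self.max_y


# Hypothetical regions in WGS84 longitude/latitude, for illustration only.
REGIONS = [
    Region("west", -125.0, 24.0, -100.0, 50.0),
    Region("east", -100.0, 24.0, -66.0, 50.0),
]


def region_for(x, y, regions=REGIONS, default="unassigned"):
    """Return the first region containing the point, or a default partition."""
    for region in regions:
        if region.contains(x, y):
            return region.name
    return default


print(region_for(-118.2, 34.0), region_for(-74.0, 40.7), region_for(0.0, 0.0))
```

Points on a shared edge go to the first matching region in the list, so each event is still assigned to exactly one partition.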

Event loads

When partitioning your event streams, the first concept to consider is event load. An event stream’s load is defined not only by the method and rate at which data arrives at an input connector, but also by the number of events that arrive over a specific period of time. It is recommended that you partition event streams onto separate GeoEvent Server systems based on their event load type: even loads versus uneven loads. During event processing, uneven loads may require more system resources and cause resource contention for the even-load event streams. For this reason, it is recommended that you place your even-load and uneven-load event streams on two different systems.
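One way to decide which category a stream belongs to is to count its events over fixed intervals and measure how much those counts vary. The sketch below uses the coefficient of variation (standard deviation divided by mean); both the `load_type` helper and the `0.5` threshold are hypothetical choices for illustration, not a GeoEvent Server setting.

```python
from statistics import mean, pstdev


def load_type(counts_per_interval, cv_threshold=0.5):
    """Classify a stream as 'even' or 'uneven' from event counts per fixed interval."""
    if not counts_per_interval:
        raise ValueError("need at least one interval")
    avg = mean(counts_per_interval)
    if avg == 0:
        return "even"  # no traffic at all, so there is nothing to surge
    cv = pstdev(counts_per_interval) / avg  # coefficient of variation
    return "uneven" if cv > cv_threshold else "even"


print(load_type([100, 102, 98, 100]))  # even
print(load_type([0, 0, 5000, 0]))      # uneven
```

The interval length matters: a stream that looks even when counted per hour may still be bursty when counted per second, so pick an interval close to the time the system needs to process a batch.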

Even event loads

Even event loads deliver events to a GeoEvent Server input at a consistent, even pace. An even event load is typically sent from systems generating data at a constant rate. For example:

- An automated vehicle location (AVL) event stream where each vehicle is reporting its location every 30 seconds.

- A stream gauge network where each gauge reports stream height data every 60 minutes.

When processing even event loads, expect GeoEvent Server to operate at a constant level. Spikes in CPU or memory usage are not expected in this case; therefore, the system should be designed for a steady load.
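Because an even load is predictable, its steady-state rate can be estimated directly from the number of reporting sources and their reporting interval. The sketch below uses hypothetical fleet and network sizes applied to the two examples above; the `steady_rate` helper is illustrative only.

```python
def steady_rate(reporters: int, report_interval_s: float) -> float:
    """Events per second when every reporter sends one event per interval."""
    return reporters / report_interval_s


# Hypothetical sizes: 3,000 vehicles every 30 s; 500 stream gauges every 60 minutes.
print(steady_rate(3000, 30))            # 100.0
print(round(steady_rate(500, 3600), 3))  # 0.139
```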

Uneven event loads

With uneven event loads, events arrive at a GeoEvent Server input at an irregular rate. These surge-type event loads can cause large numbers of events to queue up in an input’s message queue while waiting to be processed. For example:

- A lightning tracking system that reports lightning strikes across a region.

- A rental scooter system that pushes scooter status when each scooter is in service.

When processing uneven event loads, expect system resources to be heavily utilized at the arrival of each surge event set. During this time, CPU may spike to 100%, memory may temporarily increase, and the Kafka topics (message queues) will require significantly more disk space (as much as 3 or 4 times the default). During these processing times, other event streams on the system may be starved for resources as GeoEvent Server works through the large number of events. Depending on your objectives for processing this data, a system can be designed to process the surge of events in just enough time before the next surge arrives (minimum system requirements; just in time processing). Alternatively, additional resources can be allocated to the system to allow event processing at some desired maximum rate (maximum system requirements; critical event processing).
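The difference between the two sizing approaches comes down to how much time the system is given to clear a surge. The sketch below computes the sustained processing rate needed in each case and the backlog left over when throughput falls short. The surge size, intervals, and the `required_rate` and `backlog_after` helpers are all hypothetical and meant only to illustrate the arithmetic.

```python
def required_rate(surge_events: int, seconds_available: float) -> float:
    """Minimum sustained rate (events/s) to clear a surge in the time available."""
    if seconds_available <= 0:
        raise ValueError("seconds_available must be positive")
    return surge_events / seconds_available


def backlog_after(surge_events: int, rate: float, seconds: float) -> float:
    """Events still queued after processing at `rate` for `seconds`."""
    return max(0.0, surge_events - rate * seconds)


# Hypothetical surge: 60,000 events arriving every 300 seconds.
jit = required_rate(60_000, 300)       # just in time: finish before the next surge
critical = required_rate(60_000, 30)   # critical: finish within 30 seconds
print(jit, critical)                   # 200.0 2000.0
print(backlog_after(60_000, 150, 300)) # 15000.0
```

If throughput stays below the just-in-time rate, the leftover backlog carries into the next surge and the queue grows without bound, which is when disk usage for the Kafka topics climbs.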
